The first few articles of the Circuits project will focus on early vision in InceptionV1.

Over the course of these layers, we see the network go from raw pixels up to sophisticated boundary detection, basic shape detection (eg. curves, circles, spirals, triangles), eye detectors, and even crude detectors for very small heads. Along the way, we see a variety of interesting intermediate features, including Complex Gabor detectors (similar to some classic “complex cells” of neuroscience), black and white vs color detectors, and small circle formation from curves.

Studying early vision has two major advantages as a starting point in our investigation.

Firstly, it’s particularly easy to study: it’s close to the input, the circuits are only a few layers deep, and there aren’t that many different neurons.

Before we dive into detailed explorations of different parts of early vision, we wanted to give a broader overview of how we presently understand it. This article sketches out our understanding, as an annotated collection of what we call “neuron groups.” We also provide illustrations of selected circuits at each layer.

By limiting ourselves to early vision, this article “only” considers the first 1,056 neurons of InceptionV1.

Playing Cards with Neurons

Dmitri Mendeleev is often said to have discovered the Periodic Table by playing “chemical solitaire,” writing the details of each element on a card and patiently fiddling with different ways of classifying and organizing them. Some modern historians are skeptical about the cards, but Mendeleev’s story is a compelling demonstration that there can be a lot of value in simply organizing phenomena, even when you don’t have a theory or firm justification for that organization yet. Mendeleev is far from unique in this. For example, in biology, taxonomies of species preceded genetics and the theory of evolution, which later gave them a theoretical foundation.

Our experience is that many neurons in vision models seem to fall into families of similar features. For example, it’s not unusual to see a dozen neurons detecting the same feature in different orientations or colors. Perhaps even more strikingly, the same “neuron families” seem to recur across models! Of course, it’s well known that Gabor filters and color contrast detectors reliably make up the first layer of convolutional neural networks, but we were quite surprised to see this generalize to later layers.

This article shares our working categorization of units in the first five layers of InceptionV1 into neuron families. These families are ad-hoc, human-defined collections of features that seem to be similar in some way. We’ve found these helpful for communicating among ourselves and breaking the problem of understanding InceptionV1 into smaller chunks. While there are some families we suspect are “real”, many others are categories of convenience, or categories we have low confidence in. The main goal of these families is to help researchers orient themselves.

In constructing this categorization, our understanding of individual neurons was developed by looking at feature visualizations, dataset examples, how a feature is built from the previous layer, how it is used by the next layer, and other analysis. It’s worth noting that the level of attention we’ve given to individual neurons varies greatly: we’ve dedicated entire forthcoming articles to detailed analysis of some of these units, while many others have only received a few minutes of cursory investigation.

In some ways, our categorization of units is similar to Net Dissect. In mixed3b we see many units which appear, from dataset examples, likely to be familiar feature types like curve detectors, divot detectors, boundary detectors, eye detectors, and so forth, but are classified as weakly correlated with another feature, often objects that it seems unlikely could be detected at such an early layer.

Or in another fun case, there is a feature (372) which is most correlated with a cat detector, but appears to be detecting left-oriented whiskers!

Caveats

- This is a broad overview and our understanding of many of these units is low-confidence. We fully expect, in retrospect, to realize we misunderstood some units and categories.

- Many neuron groups are catch-all categories or convenient organizational categories that we don’t think reflect fundamental structure.

- Even for neuron groups we suspect do reflect a fundamental structure (eg. some can be recovered from factorizing the layer’s weight matrices), the boundaries of these groups can be blurry and the inclusion of some neurons involves judgement calls.

Presentation of Neurons

In order to talk about neurons, we need to somehow represent them.

While we could use neuron indices, it’s very hard to keep hundreds of numbers straight in one’s head.

Instead, we use feature visualizations.

When we represent a neuron with a feature visualization, we don’t intend to claim that the feature visualization captures the entirety of the neuron’s behavior.

Rather, the role of a feature visualization is like a variable name in understanding a program.

It replaces an arbitrary number with a more meaningful symbol.

Presentation of Circuits

Although this article is focused on giving an overview of the features which exist in early vision, we’re also interested in understanding how they’re computed from earlier features.

To do this, we present circuits consisting of a neuron, the units it has the strongest (L2 norm) weights to in the previous layer, and the weights between them.

Some neurons in mixed3a and mixed3b are in branches consisting of a “bottleneck” 1x1 conv that greatly reduces the number of channels, followed by a 5x5 conv. Although there is a ReLU between them, we generally think of them as a low rank factorization of a single weight matrix and visualize the product of the two weights. Additionally, some neurons in these layers are in a branch consisting of maxpooling followed by a 1x1 conv; we present these units as their weights replicated over the region of their maxpooling.
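As a rough illustration of the two computations just described, here is a minimal NumPy sketch. It is not the code used to produce the article's diagrams. The function names, array layouts, channel counts, and random weights are all assumptions made for the example. It folds a 1x1 bottleneck into the 5x5 conv that follows it (ignoring the ReLU, as the text does), then ranks previous-layer channels by the L2 norm of their weights to one unit.

```python
import numpy as np


def expand_bottleneck(w_1x1, w_5x5):
    """Fold a 1x1 bottleneck into the 5x5 conv that follows it.

    w_1x1: [c_in, c_mid] weights of the 1x1 conv.
    w_5x5: [5, 5, c_mid, c_out] weights of the 5x5 conv.
    The ReLU between the two convs is deliberately ignored, so the result
    is only a linear approximation intended for visualization.
    Returns combined weights of shape [5, 5, c_in, c_out].
    """
    return np.einsum("im,hwmo->hwio", w_1x1, w_5x5)


def strongest_inputs(weights, out_unit, k):
    """Rank input channels by the L2 norm of their weights to one unit.

    weights: [height, width, c_in, c_out].
    Returns (indices of the k strongest input channels, all norms).
    """
    per_input = weights[:, :, :, out_unit]              # [height, width, c_in]
    norms = np.sqrt((per_input ** 2).sum(axis=(0, 1)))  # [c_in]
    return np.argsort(-norms)[:k], norms


# Hypothetical shapes and random values, for illustration only.
rng = np.random.default_rng(0)
w_1x1 = rng.normal(size=(192, 16))
w_5x5 = rng.normal(size=(5, 5, 16, 32))
combined = expand_bottleneck(w_1x1, w_5x5)
top, norms = strongest_inputs(combined, out_unit=0, k=5)
print(combined.shape, top)
```

At each spatial position the combined matrix has rank at most the bottleneck width, which is the sense in which the pair of convs acts as a low rank factorization.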

For example, here is a circuit of a circle detecting unit in mixed3a being assembled from earlier curves and a primitive circle detector.

We’ll discuss this example in more depth later.

At any point, you can click on a neuron’s feature visualization to see its weights to the 50 neurons in the previous layer it is most connected to (that is, how it is assembled from the previous layer), and also the 50 neurons in the next layer it is most connected to (that is, how it is used going forward). This allows further investigation, and gives you an unbiased view of the weights if you’re concerned about cherry-picking.

conv2d0

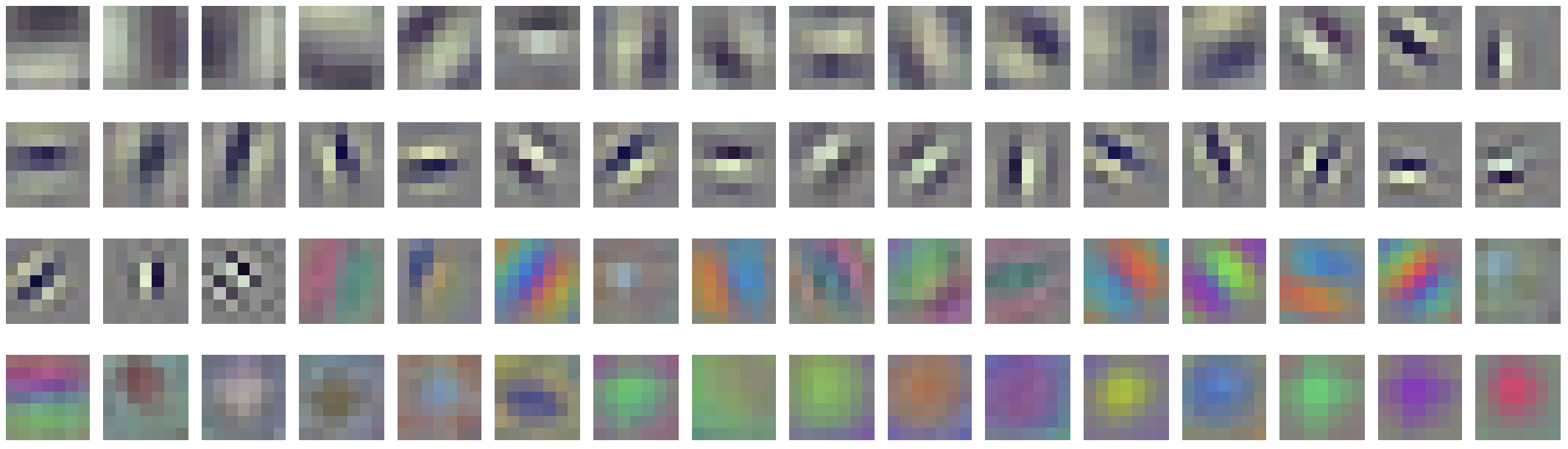

The first conv layer of every vision model we’ve looked at consists mostly of two kinds of features: color-contrast detectors and Gabor filters.

InceptionV1’s conv2d0 is no exception to this rule, and most of its units fall into these categories.

In contrast to other models, however, the features aren’t perfect color contrast detectors and Gabor filters.

For lack of a better word, they’re messy.

We have no way of knowing, but it seems likely this is a result of the gradient not reaching the early layers very well during training.

Note that InceptionV1 predated the adoption of modern techniques like batch norm and Adam, which make it much easier to train deep models well.

If we compare to the TF-Slim

One subtlety that’s worth noting here is that Gabor filters almost always come in pairs of weights which are negative versions of each other, both in InceptionV1 and other vision models. A single Gabor filter can only detect edges at some offsets, but the negative version fills in holes, allowing for the formation of complex Gabor filters in the next layer.

conv2d1

In conv2d1, we begin to see some of the classic complex cell features of visual neuroscience.

These neurons respond to similar patterns to units in conv2d0, but are invariant to some changes in position and orientation.

Complex Gabors: A nice example of this is the “Complex Gabor” feature family. Like simple Gabor filters, complex Gabors detect edges. But unlike simple Gabors, they are relatively invariant to the exact position of the edge or which side is dark or light. This is achieved by being excited by multiple Gabor filters in similar orientations — and most critically, by being excited by “reciprocal Gabor filters” that detect the same pattern with dark and light switched. This can be seen as an early example of the “union over cases” motif.
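To make the “union over cases” idea concrete, here is a minimal NumPy sketch. The filter parameters, the synthetic step-edge patch, and the unit-weight sum are illustrative assumptions, not weights taken from InceptionV1. A simple unit (a Gabor filter followed by a ReLU) fires for only one edge polarity, while a unit that sums the responses of the filter and its reciprocal fires for both.

```python
import numpy as np


def gabor(size=9, wavelength=4.0, sigma=2.0, theta=0.0, phase=np.pi / 2):
    """Odd-phase Gabor filter: a sinusoid under a Gaussian envelope."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    x_rot = x * np.cos(theta) + y * np.sin(theta)
    envelope = np.exp(-(x ** 2 + y ** 2) / (2 * sigma ** 2))
    return envelope * np.cos(2 * np.pi * x_rot / wavelength + phase)


def step_edge(size=9):
    """Vertical edge patch: -1 on the left half, +1 on the right half."""
    half = size // 2
    _, x = np.mgrid[-half:half + 1, -half:half + 1]
    return np.where(x > 0, 1.0, -1.0)


def simple_response(patch, filt):
    """Simple-cell-like unit: linear filter followed by a ReLU."""
    return np.maximum((patch * filt).sum(), 0.0)


def complex_response(patch, filt):
    """Union over cases: excited by the filter OR by its reciprocal."""
    return simple_response(patch, filt) + simple_response(patch, -filt)


filt = gabor()
edge = step_edge()
for name, patch in [("edge", edge), ("inverted edge", -edge)]:
    print(name, simple_response(patch, filt), complex_response(patch, filt))
```

In this toy setup the simple response is zero for one polarity and positive for the other, while the complex response is the same for both. A real complex Gabor unit also pools over several nearby positions and orientations, which this sketch leaves out.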

Note that conv2d1 is a 1x1 convolution, so there’s only a single weight (a single line, in this diagram) between each channel in the previous layer and each channel in this one.
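One way to see why a 1x1 convolution has exactly one weight per pair of channels: it is the same matrix multiply applied independently at every spatial position. The sketch below uses made-up shapes and values, and the channel-last layout is an assumption.

```python
import numpy as np


def conv1x1(activations, weights):
    """1x1 convolution as a per-position matrix multiply.

    activations: [height, width, c_in]
    weights:     [c_in, c_out], one scalar per (input, output) channel pair
    Returns [height, width, c_out].
    """
    return np.einsum("hwi,io->hwo", activations, weights)


# Toy example: 3 input channels mapped to 2 output channels.
acts = np.arange(2 * 2 * 3, dtype=float).reshape(2, 2, 3)
w = np.array([[1.0, 0.0],
              [0.0, 1.0],
              [1.0, -1.0]])
out = conv1x1(acts, w)
print(out.shape)
```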

In addition to Complex Gabors, we see a variety of other features, including more invariant color contrast detectors, Gabor-like features that are less selective for a single orientation, and lower-frequency features.

- Compare to earlier color contrast (conv2d0) and later color contrast (conv2d2, mixed3a, mixed3b).
- … conv2d0, which makes it hard to reason about.

conv2d2

In conv2d2 we see the emergence of very simple shape predecessors.

This layer sees the first units that might be described as “line detectors”, preferring a single longer line to a Gabor pattern and accounting for about 25% of units.

We also see tiny curve detectors, corner detectors, divergence detectors, and a single very tiny circle detector.

One fun aspect of these features is that you can see that they are assembled from Gabor detectors in the feature visualizations, with curves being built from small piecewise Gabor segments.

All of these units still moderately fire in response to incomplete versions of their feature, such as a small curve running tangent to the edge detector.

Since conv2d2 is a 3x3 convolution, our understanding of these shape precursor features (and some texture features) maps to particular ways Gabor and lower-frequency edges are being spatially assembled into new features.

At a high level, we see a few primary patterns:

We also begin to see various kinds of texture and color detectors start to become a major constituent of the layer, including color-contrast and color center surround features, as well as Gabor-like, hatch, low-frequency and high-frequency textures. A handful of units look for different textures on different sides of their receptive field.

- Compare to earlier color contrast (conv2d0, conv2d1) and later color contrast (mixed3a, mixed3b).
- Compare to later Color Center-Surround (mixed3a) and Color Center-Surround (mixed3b).
- Compare to later curves (mixed3a) and curves (mixed3b). See also circuit example and discussion of use in forming small circles/eyes (mixed3a).
- Compare to later brightness gradients (mixed3a) and brightness gradients (mixed3b).

mixed3a

mixed3a has a significant increase in the diversity of features we observe.

Some of them (curve detectors and high-low frequency detectors) were discussed in Zoom In and will be discussed again in later articles in great detail.

But there are some really interesting circuits in mixed3a which we haven’t discussed before, and we’ll go through a couple of selected ones to give a flavor of what happens at this layer.

Black & White Detectors: One interesting property of mixed3a is the emergence of “black and white” detectors, which detect the absence of color.

Prior to mixed3a, color contrast detectors look for transitions of a color to near complementary colors (eg. blue vs yellow).

From this layer on, however, we’ll often see color detectors which compare a color to the absence of color.

Additionally, black and white detectors can allow the detection of greyscale images, which may be correlated with ImageNet categories (see 4a:479, which detects black and white portraits).

The circuit for our black and white detector is quite simple: almost all of its large weights are negative, detecting the absence of colors.

Roughly, it computes NOT(color_feature_1 OR color_feature_2 OR ...).
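A toy version of this NOT-of-OR computation is sketched below. The weights, the positive bias, and the three “color feature” activations are assumptions chosen for illustration. The source only tells us that the large weights are negative, so the baseline excitation that lets the unit fire when no color is present is a stand-in.

```python
import numpy as np


def black_and_white_unit(color_acts, inhibitory_weights, bias=1.0):
    """ReLU unit with a positive baseline and large negative weights.

    It stays active only while every color feature is (near) silent,
    approximating NOT(color_feature_1 OR color_feature_2 OR ...).
    The bias value is an assumption, not a parameter from InceptionV1.
    """
    pre_activation = bias + np.dot(inhibitory_weights, color_acts)
    return np.maximum(pre_activation, 0.0)


weights = np.full(3, -5.0)  # hypothetical strongly negative weights

no_color = np.zeros(3)                  # greyscale input: no color feature fires
one_color = np.array([0.0, 0.5, 0.0])   # a single color feature fires weakly
print(black_and_white_unit(no_color, weights))
print(black_and_white_unit(one_color, weights))
```

Because every weight is strongly negative, any single active color feature is enough to silence the unit, which is what gives the circuit its OR-then-NOT character.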

Small Circle Detector: We also see somewhat more complex shapes in mixed3a. Of course, curves (which we discussed in Zoom In) are a prominent example of this.

But there are lots of other interesting examples.

For instance, we see a variety of small circle and eye detectors form by piecing together early curves and circle detectors (conv2d2).

Triangle Detectors: While on the matter of basic shapes, we also see triangle detectors form from earlier line (conv2d2) and shifted line (conv2d2) detectors.

However, in practice, these triangle detectors (and other angle units) seem to often just be used as multi-edge detectors downstream, or in conjunction with many other units to detect convex boundaries.

The selected circuits discussed above only scratch the surface of the intricate structure in mixed3a.

Below, we provide a taxonomized overview of all of them:

- Compare to earlier Color Center-Surround (conv2d2) and later Color Center-Surround (mixed3b).
- … (mixed3b) as an additional cue for a boundary between objects. (Larger scale high-low frequency detectors can be found in mixed4a (245, 93, 392, 301), but are not discussed in this article.) A detailed article on these is forthcoming.
- Compare to earlier brightness gradients (conv2d2) and later brightness gradients (mixed3b).
- Compare to earlier color contrast (conv2d0, conv2d1, conv2d2) and later color contrast (mixed3b).
- Compare to earlier curves (conv2d2) and later curves (mixed3b). See the full paper on curve detectors.
- … (mixed3b). See also circuit example and discussion.

mixed3b

mixed3b straddles two levels of abstraction.

On the one hand, it has some quite sophisticated features that don’t really seem like they should be characterized as “early” or “low-level”: object boundary detectors, early head detectors, and more sophisticated part of shape detectors.

On the other hand, it also has many units that still feel quite low-level, such as color center-surround units.

Boundary detectors: One of the most striking transitions in mixed3b is the formation of boundary detectors.

When you first look at the feature visualizations and dataset examples, you might think these are just another iteration of edge or curve detectors.

But they are in fact combining a variety of cues to detect boundaries and transitions between objects.

Perhaps the most important one is the high-low frequency detectors we saw develop at the previous layer.

Notice that it largely doesn’t care which direction the change in color or frequency is, just that there’s a change.

We sometimes find it useful to think about the “goal” of early vision.

Gradient descent will only create features if they are useful for features in later layers.

Which later features incentivized the creation of the features we see in early vision?

These boundary detectors seem to be the “goal” of the high-low frequency detectors (mixed3a) we saw in the previous layer.

Curve-based Features: Another major theme in this layer is the emergence of more complex and specific shape detectors based on curves. These include more sophisticated curves, circles, S-shapes, spirals, divots, and “evolutes” (a term we’ve repurposed to describe units detecting curves facing away from the middle). We’ll discuss these in detail in a forthcoming article on curve circuits, but they warrant mention here.

Conceptually, you can think of the weights as piecing together curve detectors as something like this:

Fur detectors: Another interesting circuit (albeit one probably quite specific to the dog focus of ImageNet) is the implementation of “oriented fur detectors”, which detect fur parting, like hair on one’s head.

They’re implemented by piecing together fur precursors (mixed3a) so that they converge in a particular way.

Again, these circuits only scratch the surface of mixed3b.

Since it’s a larger layer with lots of families, we’ll go through a couple particularly interesting and well understood families first:

- See the full paper on curve detectors.
- Compare to earlier brightness gradients (conv2d2) and brightness gradients (mixed3a).

In addition to the above features, there are also a lot of other features which don’t fall into such a neat categorization.

One frustrating issue is that mixed3b has many units that don’t have a simple low-level articulation, but also are not yet very specific to a high-level feature.

For example, there are units which seem to be developing towards detecting certain animal body parts, but still respond to many other stimuli as well and so are difficult to describe.

- Compare to earlier Color Center-Surround (conv2d2) and Color Center-Surround (mixed3a).
- … (conv2d0, conv2d1, conv2d2, mixed3a).

Conclusion

The goal of this essay was to give a high-level overview of our present understanding of early vision in InceptionV1. Every single feature discussed in this article is a potential topic of deep investigation. For example, are curve detectors really curve detectors? What types of curves do they fire for? How do they behave on various edge cases? How are they built? Over the coming articles, we’ll do deep dives rigorously investigating these questions for a few features, starting with curves.

Our investigation into early vision has also left us with many broader open questions. To what extent do these feature families reflect fundamental clusters in features, versus a taxonomy that might be helpful to humans but is ultimately somewhat arbitrary? Is there a better taxonomy, or another way to understand the space of features? Why do features often seem to form in families? To what extent do the same feature families form across models? Is there a “periodic table of low-level visual features”, in some sense? To what extent do later features admit a similar taxonomy? We think these could be interesting questions for future work.